Packet loss occurs when one or more packets of data travelling across a computer network fail to reach their destination. Packet loss is typically caused by network congestion. Packet loss is measured as a percentage of packets lost with respect to packets sent.

The Transmission Control Protocol (TCP) detects packet loss and performs retransmissions to ensure reliable messaging. Packet loss in a TCP connection is also used to avoid congestion and reduces throughput of the connection.

In streaming media and online game applications, packet loss can affect the user experience.

Causes

Packet loss is typically caused by network congestion. When content arrives for a sustained period at a given router or network segment at a rate greater than it is possible to send through, then there is no other option than to drop packets. If a single router or link is constraining the capacity of the complete travel path or of network travel in general, it is known as a bottleneck.

Packet loss can be caused by a number of other factors that can corrupt or lose packets in transit, such as radio signals that are too weak due to distance or multi-path fading (in radio transmission), faulty networking hardware, or faulty network drivers. Packets are also intentionally dropped by normal routing routines (such as Dynamic Source Routing in ad hoc networks, ) and through network dissuasion technique for operational management purposes.

Effects

Packet loss can reduce throughput for a given sender, whether unintentionally due to network malfunction, or intentionally as a means to balance available bandwidth between multiple senders when a given router or network link reaches nears its maximum capacity.

When reliable delivery is necessary, packet loss increases latency due to additional time needed for retransmission. Assuming no retransmission, packets experiencing the worst delays might be preferentially dropped (depending on the queuing discipline used) resulting in lower latency overall at the price of data loss.

During typical network congestion, not all packets in a stream are dropped. This means that undropped packets will arrive with low latency compared to retransmitted packets, which arrive with high latency. Not only do the retransmitted packets have to travel part of the way twice, but the sender will not realize the packet has been dropped until it either fails to receive acknowledgement of receipt in the expected order, or fails to receive acknowledgement for a long enough time that it assumes the packet has been dropped as opposed to merely delayed.

Measurement

Packet loss may be measured as frame loss rate defined as the percentage of frames that should have been forwarded by a network but were not.

Acceptable packet loss

Packet loss is closely associated with quality of service considerations, and is related to the erlang unit of measure.

The amount of packet loss that is acceptable depends on the type of data being sent. For example, for Voice over IP traffic, one commentator reckoned that "[m]issing one or two packets every now and then will not affect the quality of the conversation. Losses between 5% and 10% of the total packet stream will affect the quality significantly." Another described less than 1% packet loss as "good" for streaming audio or video, and 1-2.5% as "acceptable".[7] On the other hand, when transmitting a text document or web page, a single dropped packet could result in losing part of the file, which is why a reliable delivery protocol would be used for this purpose (to retransmit dropped packets).

Diagnosis

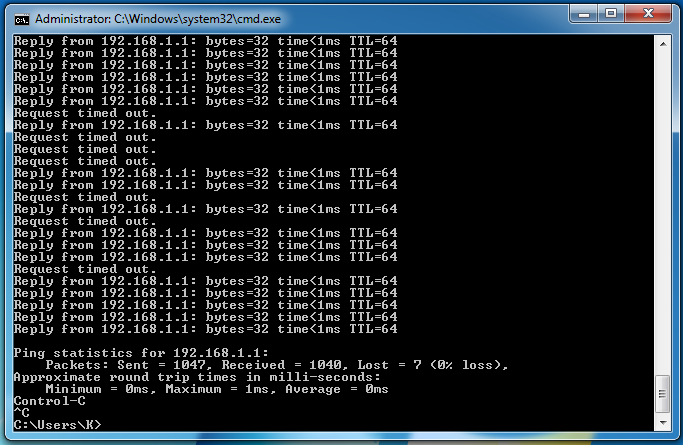

Packet loss is detected by application protocols such as TCP, but when a person such as a network administrator needs to detect and diagnose packet loss, they typically use a purpose-built tool. Many routers have status pages or logs, where the owner can find the number or percentage of packets dropped over a particular period.

For remote detection and diagnosis, the Internet Control Message Protocol provides an "echo" functionality, where a special packet is transmitted that always produces a reply after a certain number of network hops, from whichever node has just received it. Tools such as ping, traceroute, and MTR use this protocol to provide a visual representation of the path packets are taking, and to measure packet loss at each hop.

In some cases, these tools may indicate drops for packets that are terminating in a small number of hops, but not those making it to the destination. For example, routers may give echoing of ICMP packets low priority and drop them preferentially in favor of spending resources on genuine data; this is generally considered an artifact of testing and can be ignored in favor of end-to-end results.

Rationale

The Internet Protocol (IP) is designed according to the end-to-end principle as a best-effort delivery service, with the intention of keeping the logic routers must implement as simple as possible. If the network made reliable delivery guarantees on its own, that would require store and forward infrastructure, where each router devoted a significant amount of storage space to packets while it waited to verify that the next node properly received it. A reliable network would not be able to maintain its delivery guarantees in the event of a router failure. Reliability is also not needed for all applications. For example, with a live audio stream, it is more important to deliver recent packets quickly than to ensure that stale packets are eventually delivered. An application may also decide to retry an operation that is taking a long time, in which case another set of packets will be added to the burden of delivering the original set. Such a network might also need a command and control protocol for congestion management, adding even more complexity.

To avoid all of these problems, the Internet Protocol allows for routers to simply drop packets if the router or a network segment is too busy to deliver the data in a timely fashion, or if the IPv4 header checksum indicates the packet has been corrupted. Obviously this is not ideal for speedy and efficient transmission of data, and is not expected to happen in an uncongested network. Dropping of packets acts as an implicit signal that the network is congested, and may cause senders to reduce the amount of bandwidth consumed, or attempt to find another path. For example, the Transmission Control Protocol (TCP) is designed so that excessive packet loss will cause the sender to throttle back and stop flooding the bottleneck point with data (using perceived packet loss as feedback to discover congestion).

Packet recovery for reliable delivery

The Internet Protocol leaves responsibility for any retransmission of dropped packets, or "packet recovery" to the endpoints - the computers sending and receiving the data. They are in the best position to decide whether retransmission is necessary, because the application sending the data should know whether speed is more important than reliability, whether a message should be re-attempted in whole or in part, whether or not the need to send the message has passed, and how to vary the amount of bandwidth consumed to account for any congestion.

Some network transport protocols such as TCP provide endpoints an easy way to ensure reliable delivery of packets, so that individual applications don't need to implement logic for this themselves. In the event of packet loss, the receiver asks for retransmission or the sender automatically resends any segments that have not been acknowledged. Although TCP can recover from packet loss, retransmitting missing packets causes the throughput of the connection to decrease. This drop in throughput is due to the sliding window protocols used for acknowledgment of received packets. In certain variants of TCP, if a transmitted packet is lost, it will be re-sent along with every packet that had been sent after it. This retransmission causes the overall throughput of the connection to drop.

Protocols such as User Datagram Protocol (UDP) provide no recovery for lost packets. Applications that use UDP are expected to define their own mechanisms for handling packet loss.

Impact of queuing discipline

Main article: Queuing discipline

There are many methods used for determining which packets to drop. Most basic networking equipment will use FIFO queuing for packets waiting to go through the bottleneck and they will drop the packet if the queue is full at the time the packet is received. This type of packet dropping is called tail drop. However, dropping packets when the queue is full is undesirable for any connection that requires real-time throughput. In cases where quality of service is rate limiting a connection, packets may be intentionally dropped in order to slow down specific services to ensure available bandwidth for other services marked with higher importance (like those used in the leaky bucket algorithm). For this reason, packet loss is not necessarily an indication of poor connection reliability or a bottleneck.